Cybertelecom Federal Internet Law & Policy An Educational Project

Content Delivery Networks

© Cybertelecom :: In the 1990s, content would be hosted on a server at one "end" of the Internet and requested by an individual at another "end" of the Internet. The content would move upstream through the content service's access provider and the access provider's backbone provider until it was exchanged with the viewer's backbone provider and then passed to the viewer's access provider. Each time the content was requested, it would make this full trip across the Internet. If there was "flash demand" for content, the demand could overwhelm capacity and result in congestion. In 1999, catastrophically demonstrating the problem of content delivery at that time, Victoria's Secret advertised during the Super Bowl that it would webcast its fashion show. Some 1.5 million people attempted to view the Victoria's Secret webcast, overwhelming the server infrastructure and resulting in a poor experience. [Adler] [Borland (1.5 million hits during Victoria’s Secret show, "many users were unable to reach the site during the live broadcast because of network bottlenecks and other Internet roadblocks.”)]

Tim Berners-Lee, in 1995, went before MIT and said, "This won't scale." MIT responded by inventing content delivery networks. [Akamai History] [Berners-Lee, The MIT/Brown Vannevar Bush Symposium (1995) (raising problem of flash demand and ability of systems to handle response)] [Mitra (quoting Tom Leighton, "Tim was interested in issues with the Internet and the web, and he foresaw there would be problems with congestion. Hot spots, flash crowds, … and that the centralized model of distributing content would be facing challenges. He was right.... He presented an ideal problem for me and my group to work on.")] [Berners-Lee] [Held 149] (For discussion of flash demand, see [Jung] [Khan]) A 1998 entrepreneurship competition at MIT resulted in the '703 patent, which became the basis of Akamai. [Akamai History] [Khan]

At about the same time, Sandpiper Networks developed its CDN solution and filed for its '598 patent. Prof. David Farber was one of the contributors to this patent. Sandpiper began offering its "Footprint" CDN service in 1998.

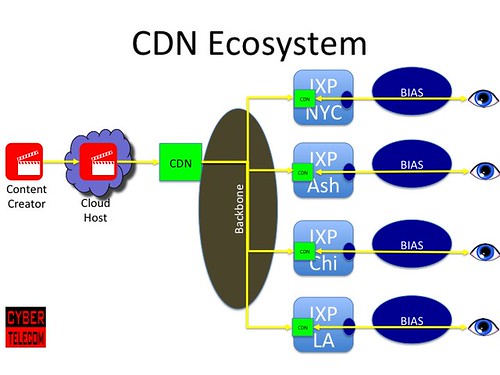

A Content Delivery Network (CDN) is an intelligent array of cache servers. [Charter TWC Merger Order para. 96] [What is a CDN, Akamai] The service analyzes demand and attempts to pre-position content on servers as close to eyeballs as possible. Instead of having content transmitted across the Internet each time it is requested, now content is delivered once, stored on a cache server at the gateway of an access network, and then served upon request. [Akamai Techs. Inc. v. Cable & Wireless Internet Servs., Inc., 344 F.3d at 1190-91] [Limelight Networks, 134 S.Ct. at 2115] The first generation of CDNs was focused on static or dynamic web documents; the second generation focused on video on demand and audio. [Khan]
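The cache behavior described above can be sketched as a toy least-recently-used (LRU) cache standing in for a single edge server. This is a minimal illustration under simplifying assumptions (capacity counted in objects, no expiry or revalidation), not any CDN's actual design; the `EdgeCache` class, its `capacity`, and the `fetch_from_origin` callback are hypothetical names.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Toy LRU cache standing in for one CDN edge server (hypothetical)."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self.store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.origin_fetches = 0

    def get(self, url: str) -> bytes:
        if url in self.store:
            # Cache hit: serve the stored copy and mark it most recently used.
            self.store.move_to_end(url)
            self.hits += 1
            return self.store[url]
        # Cache miss: one trip back across the Internet to the origin server.
        self.origin_fetches += 1
        body = self.fetch_from_origin(url)
        self.store[url] = body
        if len(self.store) > self.capacity:
            # Over capacity: evict the least recently used object.
            self.store.popitem(last=False)
        return body


if __name__ == "__main__":
    def origin(url: str) -> bytes:
        return f"content of {url}".encode()

    edge = EdgeCache(capacity=100, fetch_from_origin=origin)
    for _ in range(10_000):  # a "flash crowd" requesting the same object
        edge.get("https://origin.example/fashion-show")
    print(edge.origin_fetches, edge.hits)  # 1 9999
```

A flash crowd for one object produces a single origin fetch; every later request is served from the edge.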

The CDN is a win-win. The CDN offers the content provider the advantage of transit cost avoidance. Even though the content provider is now paying to deliver the content all the way across the backbones to the access network's gateway (instead of "half way" to the backbones' peering point), the content provider pays to deliver the content only once or a few times. Further, the content provider benefits from improved quality of delivery: the server is closer to the audience, the traffic bypasses interconnection congestion, and the traffic competes with less cross traffic along the way. Likewise, the CDN offers the access provider the advantages of transit cost avoidance and quality of service. The access provider had been paying transit in order to receive the same content thousands of times; the CDN offers to provide the content directly to the access provider on a settlement-free basis. The access provider's subscribers receive the content with a higher quality of service and are happy.
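To make the "deliver once, serve many times" arithmetic concrete, here is a back-of-the-envelope sketch. The viewer count, file size, and number of cache fills are hypothetical inputs, and the model ignores protocol overhead and re-fills after cache eviction.

```python
def transit_gb(requests: int, object_gb: float, cache_fills: int) -> tuple[float, float]:
    """Return (GB crossing transit without a CDN, GB crossing transit with edge caches).

    Without a CDN, every request pulls the object across the Internet.
    With a CDN, only the cache fills cross; the edge serves the repeats locally.
    """
    without_cdn = requests * object_gb
    with_cdn = cache_fills * object_gb
    return without_cdn, with_cdn


if __name__ == "__main__":
    # Hypothetical figures: 100,000 viewers of a 2 GB video, filled into 3 edge caches.
    before, after = transit_gb(requests=100_000, object_gb=2.0, cache_fills=3)
    print(f"{before:,.0f} GB vs {after:,.0f} GB")  # 200,000 GB vs 6 GB
```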

CDNs generally interconnect with other networks using the same protocols as any other network interconnection (i.e., BGP). This is a "best effort" interconnection. Contrary to certain allegations, a CDN interconnection to an access network is not generally a "priority" interconnection. Traffic delivered from the CDN is treated the same as traffic delivered from other sources. The difference is that the CDN source is located at the gateway of the access network, closer to the eyeballs, outside the cacophony of congested transit, and able to use dedicated capacity in a pipe between the CDN and the access network. In other words, it's the same networking treatment, but it's better network engineering. Richard Whitt, Network Neutrality and the benefits of Caching, Google Public Policy Blog, Dec. 15, 2008 ("Some critics have questioned whether improving Web performance through edge caching -- temporary storage of frequently accessed data on servers that are located close to end users -- violates the concept of network neutrality. As I said last summer, this myth -- which unfortunately underlies a confused story in Monday's Wall Street Journal -- is based on a misunderstanding of the way in which the open Internet works."); Scott Gilbertson, Google Blasts WSJ, Still Committed to Network Neutrality, WIRED Dec. 15, 2008

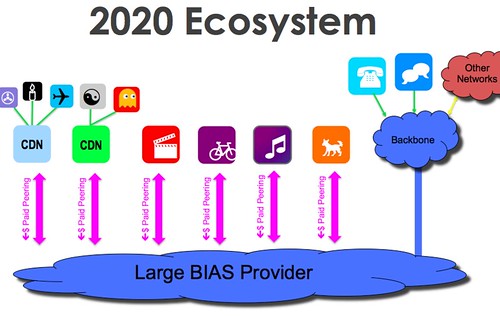

CDNs altered the interconnection ecosystem.

The old model was the movement of traffic from one end to the other, with traffic exchanged from source network to destination network at an interconnection point between backbones. The two ends of the ecosystem never negotiated directly with each other. Ends could acquire a simple transit arrangement and have full Internet access.

Now, CDNs move the traffic across the network and pre-position it at the gateway of the access network, generally at an IXP. Traffic has evolved from hot potato routing to cold potato routing, with the CDN carrying the traffic itself as far toward the destination as possible. Large CDNs further improve the quality of their service by bypassing interconnection at the IXP and embedding their servers within the access networks. [Netflix Open Connect ISP Partnership Options (listing embedded CDN servers as first option)] [AT&T Info Request Response at 13 ("AT&T MIS services allows customers to choose the capacity of their connections and to deliver as much traffic to AT&T's network as those connections will permit. AT&T's MIS service is used by large content providers REDACTED, content delivery networks REDACTED, enterprises, and large and small businesses. AT&T's MIS service can be 'on-net' services or transit services. An on-net service provides access only to AT&T customers. Transit services are Internet Access Services in which AT&T will deliver traffic to virtually any point on the Internet (directly or through its peering arrangements with other ISPs). AT&T recently developed CIP service allows customers to collocate servers in AT&T's network at locations closer to the AT&T end users who will be accessing the content on those servers. CIP customers purchase the space, power, cooling, transport, and other capabilities needed to operate their servers in AT&T's network.")] CDN interconnection with BIAS providers is evolving from interconnection at the 10 major peering cities to closer, more regional interconnection, moving the content closer to eyeballs. [Nitin Rao, Bandwidth Costs Around the World, Cloudflare Aug 17, 2016 ("our peering has particularly grown in smaller regional locations, closer to the end visitor, leading to an improvement in performance. This could be through private peering, or via an interconnection point such as the Midwest Internet Cooperative Exchange (MICE) in Minneapolis.")] Multiple content sources negotiate directly with access networks for the right to interconnect, exchange traffic, and access customers. Many interconnection transactions must occur. [Kang, Netflix CEO Q&A: Picking a Fight with the Internet Service Providers 2014 ("Then the danger is that it becomes like retransmission fees, which 20 years ago started as something little and today is huge, with blackouts and shutdowns during negotiations. Conceptually, if they can charge a little, then they can charge a lot, because they are the only ones serving the Comcast customers.")] The big question of interconnection has migrated from the core, between backbones, to the edge, between a CDN and an access network.
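The hot potato versus cold potato distinction comes down to which interconnection point a network picks for handing off traffic. The sketch below assumes one sending network and one receiving network meeting in several cities; the `Interconnect` type, the city list, and the kilometer figures are hypothetical, and real routing decisions come from BGP policy and interior routing metrics rather than a simple distance table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interconnect:
    city: str
    sender_km: float    # distance the sending network carries the traffic to reach this point
    receiver_km: float  # distance the receiving network carries it from here to the viewer


def hot_potato(points: list[Interconnect]) -> Interconnect:
    """Hand the traffic off as soon as possible: minimize the sender's own haul."""
    return min(points, key=lambda p: p.sender_km)


def cold_potato(points: list[Interconnect]) -> Interconnect:
    """Carry the traffic as far as possible: hand off closest to the viewer."""
    return min(points, key=lambda p: p.receiver_km)


if __name__ == "__main__":
    # Hypothetical: content originates in San Jose, the viewer is in Boston.
    points = [
        Interconnect("San Jose", sender_km=10, receiver_km=4300),
        Interconnect("Chicago", sender_km=2900, receiver_km=1400),
        Interconnect("New York", sender_km=4100, receiver_km=300),
    ]
    print(hot_potato(points).city)   # San Jose
    print(cold_potato(points).city)  # New York
```

Under hot potato routing the receiving network hauls the traffic across the continent; under cold potato routing the sender (here, the CDN) bears that cost and hands off next to the viewer.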

The success of CDNs has offloaded a tremendous amount of traffic from backbones. CDNs deliver the majority of traffic to large BIAS providers' subscribers over direct interconnections, and this share is trending upward.

- Thomas Volmer, Google Global Cache - Enabling Content, Slide 4, AfPIF 2017 Abidjan ("African Peering Hubs and Google Global Cache: 70% of African traffic is exchanged in continent; 80% for Sub Saharan Africa.")

- Protecting and Promoting the Open Internet, Reply Comments of Verizon and Verizon Wireless, Dkt. 14-28, 58 (Sept. 15, 2014) (“In fact, today the majority of traffic destined for our end-user subscribers is delivered to Verizon over paid, direct connections with CDNs and large content providers, not over connections with our traditional, settlement-free peering partners.”);

- An Assessment of IP-interconnection in the context of Network Neutrality, Draft Report for public consultation, Body of European Regulators for Electronic Communications, p. 23, May 29, 2012 (60% of Google’s traffic is transmitted directly to tier 2 or 3 networks on a peering basis, bypassing backbones and transit);

- Craig Labovitz, The New Internet, Global Peering Forum, Slide 13 (April 12, 2016) (CDN as a percentage of peak ingress traffic. “In 2009, CDN helped to offload less than ¼ traffic. Most content delivered via peering / transit. By 2015, the majority of traffic is CDN delivered from regional facility or provider based appliance.” 2009: ~20%; 2011: ~35%; 2013: ~51%; 2015: ~61%);

- Nitin Rao, Bandwidth Costs Around the World, Cloudflare Aug 17, 2016 ("We peer around 40% of our traffic ... a significant improvement over two years ago. The share of peered traffic is expected to grow.")

- Aaron Gould, subj: noction vs border6 vs kentik vs fcp vs ?, NANOG listserv Jul 13, 2017 (45% traffic goes through CDNs; 55% goes over transit)

Both TeleGeography and Cisco have projected a shift away from long-haul transit: Cisco forecasts robust growth for North American metro and CDN capacity with no growth for long-haul capacity, while TeleGeography projects declining transit revenue.

- Cisco Visual Networking Index: Forecast and Methodology, 2014-2019, Cisco 7-8 (May 27, 2015) (forecasting 25% annual growth for North American metro capacity, 35% growth for CDN capacity, and 0% growth for long-haul capacity)

- IP Transit Revenues, Volumes Dependent on Peering Trends, TeleGeography (July 08, 2014) (projecting that on a global basis transit revenue will decline from $4.6B in 2013 to $4.1B in 2020)
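As a rough check on what these forecasts imply, assume the Cisco rates compound annually over the five years 2014 to 2019 and the TeleGeography decline is spread evenly over the seven years 2013 to 2020 (both are assumptions about how the forecasts should be read):

$$
\begin{aligned}
\text{Metro capacity:}\quad & 1.25^{5} \approx 3.05\times \\
\text{CDN capacity:}\quad & 1.35^{5} \approx 4.48\times \\
\text{Long-haul capacity:}\quad & 1.00^{5} = 1\times \\
\text{Transit revenue:}\quad & \left(\tfrac{4.1}{4.6}\right)^{1/7} - 1 \approx -1.6\%\ \text{per year}
\end{aligned}
$$

On those assumptions, CDN capacity would more than quadruple over the forecast period while long-haul capacity stays flat.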

Backbone networks have evolved from being the heavy lifters, carrying all traffic (at some point) from one end to the other, to becoming feeder networks that deliver content to CDN servers once, while the CDNs do the heavy lifting of delivering requested content to eyeballs thousands of times.

- Tony Tauber, Seeking Default / Control Plane WIE 2016: 7th Workshop on Internet Economics, Slide 3 (Dec. 2016) (quoting Geoff Huston, "We have a Tier1 CDN Feeder System")

- Geoff Huston, APNIC, Desperately Seeking Default, Slide 55, NANOG 68 2016 ("As long as all the feeder access networks can connect to Facebook, Google, Netflix, Ebay, Amazon... then nobody seems to care enough any more to be motivated to fix this [the lack of anyone-can-connect-to-anyone reachability]. What we now have in 2016 is a Tier 1 CDN feeder system.")

- Thomas Volmer, Google Global Cache - Enabling Content, Slide 5, AfPIF 2017 Abidjan ("Cache-fill traffic is a fraction of user facing traffic.")

- Geoff Huston, The Internet’s Gilded Age, CircleID, Mar. 11, 2017 ("The outlook is not looking all that rosy, and while it may be an early call, it's likely that we've seen the last of the major infrastructure projects being financed by the transit carriers, for the moment at any rate. In many developed Internet consumer markets, there is just no call for end users to access remote services at such massive volumes anymore. Instead, the content providers are moving their mainstream content far closer to the user, and it's the content distribution systems that are taking the leading role in funding any further expansion in the Internet's long-distance infrastructure.")

Generally, CDNs were able to negotiate settlement-free peering arrangements with access providers. As the network evolved and large access providers grew in market power, CDNs began to pay Access Paid Peering to large access providers in order to deliver their content.

Derived From: Akamai Techs. Inc. v. Cable & Wireless Internet Servs., Inc., 344 F.3d 1186, 1190-91 (Fed. Cir. 2003)

"Generally, people share information, i.e., "content," over the Internet through web pages. To look at web pages, a computer user accesses the Internet through a browser, e.g., Microsoft Internet Explorer® or Netscape Navigator®. These browsers display web pages stored on a network of servers commonly referred to as the Internet. To access the web pages, a computer user enters into the browser a web page address, or uniform resource locator ("URL"). The URL is typically a string of characters, e.g., www.fedcir.gov. This URL has a corresponding unique numerical address, e.g., 156.119.80.10, called an Internet Protocol ("IP") address. When a user enters a URL into the browser, a domain name service ("DNS") searches for the corresponding IP address to properly locate the web page to be displayed. The DNS is administered by a separate network of computers distributed throughout, and connected to, the Internet. These computers are commonly referred to as DNS servers. In short, a DNS server translates the URL into the proper IP address, thereby informing the user's computer where the host server for the web page www.fedcir.gov is located, a process commonly referred to as "resolving." The user's computer then sends the web page request to the host server, or origin server. An origin server is a computer associated with the IP address that receives all web page requests and is responsible for responding to such requests. In the early stages of the Internet, the origin server was also the server that stored the actual web page in its entirety. Thus, in response to a request from a user, the origin server would provide the web page to the user's browser. Internet congestion problems quickly surfaced in this system when numerous requests for the same web page were received by the origin server at the same time.

This problem is exacerbated by the nature of web pages. A typical web page has a Hypertext Markup Language ("HTML") base document, or "container" document, with "embedded objects," such as graphics files, sound files, and text files. Embedded objects are separate digital computer files stored on servers that appear as part of the web page. These embedded objects must be requested from the origin server individually. Thus, each embedded object often has its own URL. To receive the entire web page, including the container document and the embedded objects, the user's web browser must request the web page and each embedded object. Thus, for example, if a particular web page has nine embedded objects, a web browser must make ten requests to receive the entire web page: one for the container document and nine for the embedded objects.

There have been numerous attempts to alleviate Internet congestion, including methods commonly referred to as "caching," "mirroring," and "redirection." "Caching" is a solution that stores web pages at various computers other than the origin server. When a request is made from a web browser, the cache computers intercept the request, facilitate retrieval of the web page from the origin server, and simultaneously save a copy of the web page on the cache computer. The next time a similar request is made, the cache computer, as opposed to the origin computer, can provide the web page to the user. "Mirroring" is another solution, similar to caching, except that the origin owner, or a third party, provides additional servers throughout the Internet that contain an exact copy of the entire web page located on the origin server. This allows a company, for example, to place servers in Europe to handle European Internet traffic.

"Redirection" is yet another solution in which the origin server, upon a request from a user, redirects the request to another server to handle the request. Redirection also often utilizes a process called "load balancing," or "server selection." Load balancing is often effected through a software package designed to locate the optimum origin servers and alternate servers for the quickest and most efficient delivery and display of the various container documents and embedded objects. Load balancing software locates the optimum server location based on criteria such as distance from the requesting location and congestion or traffic through the various servers.

Load balancing software was also known prior to the '703 patent. For example, Cisco Systems, Inc. marketed and sold a product by the name of "Distributed Director," which included server selection software that located the optimum server to provide requested information. The server selection software could be placed at either the DNS servers or the content provider servers. The Distributed Director product was disclosed in a White Paper dated February 21, 1997 and in U.S. Patent No. 6,178,160 ("the '160 patent"). Both the White Paper and the '160 patent are prior art to the '703 patent. The Distributed Director product, however, utilized this software in conjunction with a mirroring system in which a particular provider's complete web page was simultaneously stored on a number of servers located in different locations throughout the Internet. Mirroring had many drawbacks, including the need to synchronize continuously the web page on the various servers throughout the network. This added extra expenses and contributed to congestion on the Internet.

Massachusetts Institute of Technology is the assignee of the '703 patent directed to a "global hosting system" and methods for decreasing congestion and delay in accessing web pages on the Internet. Akamai Technologies, Inc. is the exclusive licensee of the '703 patent. The '703 patent was filed on May 19, 1999, and issued on August 22, 2000. The '703 patent discloses and claims web page content delivery systems and methods utilizing separate sets of servers to provide various aspects of a single web page: a set of content provider servers (origin servers), and a set of alternate servers. The origin servers provide the container document, i.e., the standard aspects of a given web page that do not change frequently. The alternate servers provide the often changing embedded objects. The '703 patent also discloses use of a load balancing software package to locate the optimum origin servers and alternate servers for the quickest and most efficient delivery and display of the various container documents and embedded objects.

. . .

C & W is the owner, by assignment, of the '598 patent. The '598 patent is directed to similar systems and methods for increasing the accessibility of web pages on the Internet. The '598 patent was filed on February 10, 1998, and issued on February 6, 2001. Thus the '598 patent is prior art to the '703 patent pursuant to 35 U.S.C. § 102(e). C & W marketed and sold products embodying the '598 patent under the name "Footprint." The relevant difference between the disclosure of the '598 patent and Akamai's preferred embodiment disclosed in the '703 patent is the location of the load balancing software. Akamai's preferred embodiment has the load balancing software installed at the DNS servers, while the '598 patent discloses installation of the load balancing software at the content provider, or origin, servers. The '598 patent does not disclose or fairly suggest that the load balancing software can be placed at the DNS servers. It is now understood that placement of the software at the DNS servers allows for load balancing during the resolving process, resulting in a more efficient system for accessing the proper information from the two server networks. Indeed, C & W later created a new product, "Footprint 2.0," the systems subject to the permanent injunction, in which the load balancing software was installed at the DNS servers as opposed to the content provider servers. Footprint 2.0 replaced C & W's Footprint product."
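The opinion's request-counting example (nine embedded objects means ten requests) and the '703 patent's split between origin servers, which serve the container document, and alternate servers, which serve the embedded objects, can be sketched with Python's standard-library HTML parser. This is an illustration only: the tag-to-attribute table is a simplified assumption, and the hostnames `www.origin.example` and `cdn.example.net` are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Tag -> attribute holding an embedded object's URL (a simplified, assumed subset).
EMBED_ATTRS = {"img": "src", "script": "src", "audio": "src",
               "video": "src", "source": "src", "embed": "src"}


class EmbeddedObjectCollector(HTMLParser):
    """Collects the URLs of embedded objects referenced by a container document."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.objects: list[str] = []

    def handle_starttag(self, tag, attrs):
        wanted = EMBED_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative URLs against the container document's URL.
                self.objects.append(urljoin(self.base_url, value))


def requests_needed(container_url: str, html: str) -> list[str]:
    """One request for the container document plus one per embedded object."""
    collector = EmbeddedObjectCollector(container_url)
    collector.feed(html)
    return [container_url] + collector.objects


def requests_by_host(urls: list[str]) -> Counter:
    """Count how many of those requests each host must answer."""
    return Counter(urlparse(u).hostname for u in urls)


if __name__ == "__main__":
    page = "".join(f'<img src="https://cdn.example.net/img{i}.png">' for i in range(9))
    urls = requests_needed("https://www.origin.example/index.html", page)
    counts = requests_by_host(urls)
    print(len(urls))  # 10
    print(counts["www.origin.example"], counts["cdn.example.net"])  # 1 9
```

When the embedded objects point at the alternate (CDN) host, the origin answers only the single container request and the CDN absorbs the rest.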

CDN Advantages

- Improved Performance QoE :: Both Content Creator and Access Network Audience Benefit

- caching content close to eyeballs (geographically and topologically - reducing the number of network hops between content and audience)

- [Stocker] [Buyya p. 3] [Philips] [Kaufman Slide 19] [Higginbotham 2013 (discussing Sonic.net's motivation to agree to settlement free peering with Netflix in order to avoid transit costs).]

- Netflix Petition to Deny, MB Docket No.14-57, Comcast/TWC Merger, Attachment A at 3, (August 25, 2014) Ken Florance states, "A CDN provides value to a terminating access networks because the CDN places content as close as possible to that terminating access network's customers (consumers), decreasing the distance that packets need to travel. Placing content closer to consumers results in a higher-quality consumer experience than if the consumer had to call up content that is stored further away from the terminating access network.,"

- [Data Cache and Edge Placement, Equinix Oct 25, 2017] [Architecting for the Digital Edge IOA Playbook, Equinix ("By shortening the physical distance between global applications, data, clouds and people, IOA shifts the fundamental IT delivery architecture from siloed and centralized to interconnected and distributed.")]

- [Applications of Comcast Corp., Time Warner Cable Inc., Charter Communications, Inc., and SpinCo For Consent to Assign or Transfer Control of Licenses and Authorizations, Netflix Petition to Deny, MB Docket No.14-57, Declaration of Ken Florance para. 3 (Aug. 25, 2014) ("A CDN provides value to a terminating access networks because the CDN places content as close as possible to that terminating access network's customers (consumers), decreasing the distance that packets need to travel. Placing content closer to consumers results in a higher-quality consumer experience than if the consumer had to call up content that is stored further away from the terminating access network.")] [Buyya at 3]

- Bypassing congestion; utilizing optimal capacity

- With sufficient network sensors, having intelligence on network weather conditions and ability to route accordingly

- Scalability; ability to respond to peak (flash) demand [Pathan p. 3] [Stocker p. 6]

- Transit Cost Avoidance :: Both Content Creator and Access Network Audience Benefit

- [Stocker p. 6, 8]

- Netflix Petition to Deny, MB Docket No.14-57, Attach. A at 3 ("CDNs also can reduce the transit costs paid by terminating access networks (where such networks pay for transit), because more content is stored within or near the terminating access network and so does not need to be retrieved remotely. Because the information can be stored at multiple locations within the terminating access network, CDNs also can reduce the terminating access network's overall on-network traffic. And because information is stored within or close to the terminating access network and only needs to be refreshed periodically (which can be done at off-peak hours), CDNs can lower the transit costs paid by content providers as well.")

- Cybersecurity (i.e., DDoS avoidance) [Ao-Jan Su, David R. Choffnes, Aleksandar Kuzmanovic, and Fabián E. Bustamante, Drafting Behind Akamai: Inferring Network Conditions Based on CDN Redirections ("most CDNs rely on network measurement subsystems to incorporate dynamic network information on replica selection and determine high-speed Internet paths over which to transfer content within the network")]

CDN Ecosystem

- Content Creator (Customer of CDN; Vendor to audience; Customer of an access network; pays for content creation)

- Cloud Service (Vendor to Content Creator where content is hosted and formatted; backend services also provided; customer to colocation place; customer to network services)

- Backbone network (Vendor to Cloud Service, CDN Service, and Access Network; customer to IXP; customer to upstream networks) - transmitting data between each firm in the ecosystem

- Content Delivery Network (Vendor to Content Creator; partner (or customer) with access network; customer to colocation place; customer to backbone network)

- Note that a CDN is not a network itself. A CDN is a content distribution service that moves content to an array of cache servers. A CDN service may use third party backbone services, its own backbone service, or, if an embedded cache, the network services of the access network. [Kaufman ("Akamai (non-network CDNs) do not have a backbone, so each IX instance is independent. Akamai uses transit to pull content into servers.")] [Compare Stocker p. 6 (describing CDNs as an "overlay" network)]

- CDN Intelligence which manages network and content distribution, analyzing demand for content, network weather conditions, and cost efficiencies

- Some CDNs use software on the audience's devices to monitor QoE and report back so that the CDN can revise its transmission strategy

- Types of Cached Content: [Stocker p. 7]

- Static Content

- Mixed Content

- Personalized Content

- Some static content; some live content (most commercial websites, such as a bank's site)

- Content from multiple sources (news media sources with news content, advertisements, metrics, social engagement)

- Examples: Social media, Facebook

- Software updates: generally same update to a wide audience, but the updates are regularly revised

- Dynamic Content

- Dynamic content

- Dynamic audience

- CDN cache servers

- Location (Deployed to maximize quality of delivery of traffic to audience while maximizing cost reductions)

- Embedded servers, in access networks, closest to audience (ex. Akamai, Google, Netflix)

- Peer-to-Peer: Servers are end users' home computers

- Thomas Volmer, Google Global Cache - Enabling Content, Slide 5, AfPIF 2017 Abidjan ("Google Global Cache is hosted by ISPs. GGCs are machines inside ISP networks (and do not "peer")."); Declaration of Scott Mair, Senior Vice President of Technology Planning and Engineering, AT&T Services, Inc., MB Docket No. 14-90, ¶ 17 (Oct. 15, 2014) ("Content Interconnect Platform (“CIP”). AT&T has developed an Internet Access Service specifically for CDNs. AT&T’s CIP service allows customers to collocate servers in AT&T’s network at locations closer to the AT&T end users who will be accessing the content on those servers. CIP customers purchase the space, power, cooling, transport, and other capabilities needed to operate their servers in AT&T’s network")

- Major and smaller IXP deployment, placing servers at gateways of access networks, close to audience

- Major IXP deployment, placing servers in major IXP cities, creating robust deployment

- Few cache servers, smaller number of strategically placed servers, generally in IXPs

- Content Feed :: Content from source to cache servers over backbone networks

- Where cache servers are located at IXPs, generally the CDN arranges and pays for backbone service from content source to cache servers

- Where cache servers are embedded in an access network, generally the access network covers the cost of the backbone service from content source to cache servers

- Some access networks have complained that this expense can be greater than the cost savings, generally because the CDN is moving large amounts of traffic to the edge cache server

- Interconnection :: Content Delivery from cache server to audience

- Indirect Interconnection :: Traffic travels from cache server over a third party transit service to the audience's access network. This can be true even if the cache server, the transit service's router, and the access network's router are located in the same IXP a few feet from each other.

- Direct Connection :: Cache server is directly connected to the access network, delivering localized traffic to the audience on the access network

- Financial Arrangement

- Settlement Free Peering: Generally, when CDNs interconnect with access networks at IXPs, it is done on a settlement-free basis. Most interconnection arrangements, by count of individual arrangements, are settlement-free peering.

- Incentives: The CDN's customer is the content source; it has an incentive to deliver quality content to the audience at the lowest cost. The access network's customer is the audience; it has an incentive to provide for the audience quality access to content at the lowest cost (avoiding transit fees). With these incentives aligned, the firms agree to settlement free peering. [Stocker p. 9 ("Consequently, CDNs and access ISPs are in a symbiotic relationship. Both play a critical role in determining the end-users’ QoE when consuming content.")]

- Criteria: Generally a CDN will agree to peer with an access network when the access network is present at the IXP and the access network is receiving at least a minimum amount of traffic.

- Access Paid Peering: Large access networks have been able to leverage their market positions and charge "access paid peering" to CDNs in order for the CDN to directly connect and deliver traffic

- Logistics: The CDN is physically present at the IXP as a cache server. The access network is physically present at the IXP as a router. Interconnection can be achieved:

- directly, through a cross-connect: a short fiber strung across the colocation space and plugged into ports on each box. These cross-connects are short and generally cost a few hundred dollars per month, paid to the IXP.

- publicly, through the IXP-provided public peering fabric

- Cold Potato Routing: Unlike historic peering, which engages in hot potato routing, CDNs practice cold potato routing, holding on to the content as long as possible, carrying the content as close to the eyeball as possible, and bearing the full cost of moving content across the Internet.

- Internet eXchange Points / Colocation Spaces / Data Centers (Vendor to cloud service, CDN service, backbone network, and access network)

- Access Network (Vendor to audience; partner (or vendor) with CDN; potential customer to backbone network; customer of IXP)

- Audience / End User (customer to access network; customer to content creator)

- Types of Audience

- Wide audience: same content is served to a large audience

- Personalized Audience: unique content is served to each individual member of the audience

Process

- Receive Content from Source(s)

- Distribute Content to Cache Servers

- Replicate content (including encoding and DRM)

- Analyze

- Demand for content

- Internet weather report (quality of service, DDoS)

- Least cost routing

- Distribute content (over backbone services) to strategically placed localized cache servers close to audience

- Client Mapping: Mapping of end user / audience to an appropriate cache server

- DNS Redirection: CDN's DNS server returns IP addresses of preferred cache servers

- Anycast: the CDN announces the same IP address from multiple locations via BGP; the network's BGP path selection routes each request to a nearby cache location

- Respond to end-user demand

- Receive end-user requests for content (execute appropriate personalization; provide analytics to source)

- Direct end-user request to appropriate cache server (closest server, network traffic, load balancing, content resolution)

- Serve content to end-user

- Revise analytics based on demand

[Stocker p. 6-7]
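The client-mapping and DNS-redirection steps above can be sketched as a scoring function that a CDN's authoritative DNS server might run when a resolver asks for a content hostname. This is a hypothetical illustration, not any CDN's actual algorithm: the server list, the latency table keyed by resolver prefix (a DNS-based mapper usually sees the user's recursive resolver rather than the user), the load cutoff, and the cache-miss penalty are all assumptions. It combines three of the factors listed above (closest server, load balancing, content resolution) and returns the IP addresses of the best-scoring cache servers.

```python
from dataclasses import dataclass
import ipaddress


@dataclass
class CacheServer:
    ip: str
    site: str
    load: float        # current utilization, 0.0 to 1.0 (hypothetical metric)
    has_content: bool  # whether the requested object is already cached here


# Hypothetical measurement table: estimated round-trip time (ms) from a resolver
# prefix to each cache site, as a network measurement subsystem might maintain.
RTT_MS = {
    ipaddress.ip_network("198.51.100.0/24"): {"nyc": 8.0, "chi": 22.0, "lax": 70.0},
    ipaddress.ip_network("203.0.113.0/24"): {"nyc": 68.0, "chi": 45.0, "lax": 6.0},
}

MAX_LOAD = 0.85       # assumed cutoff above which a server is not offered
MISS_PENALTY_MS = 30  # assumed cost of fetching uncached content from the origin


def estimate_rtt(resolver_ip: str, site: str) -> float:
    addr = ipaddress.ip_address(resolver_ip)
    for prefix, rtts in RTT_MS.items():
        if addr in prefix:
            return rtts.get(site, float("inf"))
    return float("inf")  # unknown resolver: no basis for proximity


def map_client(resolver_ip: str, servers: list[CacheServer], answers: int = 2) -> list[str]:
    """Return up to `answers` cache-server IPs to place in the DNS response."""
    candidates = []
    for s in servers:
        if s.load >= MAX_LOAD:
            continue  # load balancing: never offer an overloaded server
        score = estimate_rtt(resolver_ip, s.site)
        score *= 1.0 + s.load  # closer and less loaded servers score lower (better)
        if not s.has_content:
            score += MISS_PENALTY_MS  # content resolution: prefer servers holding the object
        candidates.append((score, s.ip))
    candidates.sort()
    return [ip for _, ip in candidates[:answers]]


if __name__ == "__main__":
    servers = [
        CacheServer("192.0.2.10", "nyc", 0.30, True),
        CacheServer("192.0.2.11", "nyc", 0.90, True),
        CacheServer("192.0.2.20", "chi", 0.20, False),
        CacheServer("192.0.2.30", "lax", 0.10, True),
    ]
    print(map_client("198.51.100.53", servers))  # ['192.0.2.10', '192.0.2.20']
    print(map_client("203.0.113.7", servers))    # ['192.0.2.30', '192.0.2.20']
```

Returning more than one address gives the client a fallback if the first server fails. Anycast reaches a similar outcome without per-query logic, by letting BGP routing pick the site.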

Business Plan

- Commercial (public): carries third-party content

- White label: commercial CDN service resold by third party provider

- CDN Confederations

- Private: optimized for one service or company

- Interconnection

- Generally the CDN customer is the content creator

- The CDN is in the business of delivering content and has an incentive to be widely and robustly interconnected with the audience

- Therefore, CDNs have generally interconnected with access networks on a settlement-free peering basis, creating an incentive for the access networks to move off of transit and interconnect directly with CDNs

- Ivan Philips, Costs of Content Delivery: WIE 2013 San Diego

- An Assessment of IP-interconnection in the context of Network Neutrality, Draft Report for public consultation, Body of European Regulators for Electronic Communications, p. 17 & 35, May 29, 2012 (“In Q4 2011 CDN prices declined by 20 % (vs. Q4 2010). The corresponding price decline figure for 2010 and 2009 were 25 % resp. 45 %.”).

- Boris Tulman, Akamai Technologies ("charges site owners for the aggregate amount of data delivered to end users").

- CDN Calculator, 3xScreen Media (2013) (UK) ("CDN charges vary dramatically depending on the volume of video generated. High volume CDN users typically pay $0.06 per GB or less for delivery by the leading CDNs whilst average volume users pay $0.25/GB or more. Second and third tier CDNs generally charge less.")
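To make the per-gigabyte pricing quoted above concrete, the sketch below turns viewer count, bitrate, and viewing time into a delivery bill. The two rates are the figures from the 3xScreen Media quote; the audience size, the 3 Mbps bitrate, and the 60-minute session are hypothetical inputs chosen for illustration, and the flat per-GB model is a simplification of contracts whose rates vary with volume.

```python
def gigabytes_delivered(viewers: int, bitrate_mbps: float, minutes: float) -> float:
    """Total data delivered, in decimal gigabytes (1 GB = 8,000 megabits)."""
    megabits = viewers * bitrate_mbps * minutes * 60
    return megabits / 8_000


def delivery_cost(gb: float, price_per_gb: float) -> float:
    """Flat per-GB charge (a simplifying assumption; real contracts are often tiered)."""
    return gb * price_per_gb


if __name__ == "__main__":
    # Hypothetical event: 100,000 viewers each watching 60 minutes at 3 Mbps.
    gb = gigabytes_delivered(viewers=100_000, bitrate_mbps=3.0, minutes=60)
    print(f"{gb:,.0f} GB delivered")
    for label, rate in [("high-volume rate", 0.06), ("average-volume rate", 0.25)]:
        print(f"{label} (${rate:.2f}/GB): ${delivery_cost(gb, rate):,.2f}")
```

With these assumed inputs the event moves 135,000 GB, roughly $8,100 at the high-volume rate versus $33,750 at the average-volume rate, which shows why volume discounts matter so much to large video publishers.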

Timeline

2014

- Netflix / Verizon interconnection agreement

- Netflix / Comcast interconnection agreement

- Cogent (Netflix) / Comcast interconnection dispute

- Verizon acquires Edgecast

2012

- Netflix launches CDN OpenConnect

2011

2010

- Netflix releases its video streaming service as a standalone product

2008

- Amazon CDN launched. [Candric]

2007

- Netflix launches its video streaming service, which will use Akamai and Limelight

2005

- Akamai acquires Speedera; has 80% market share. [Tulman]

2004

- Akamai and Limelight discuss a potential merger; merger is not pursued. [614 F.Supp.2d 90]

2001

- Limelight begins to offer CDN service. [614 F.Supp.2d 90]

- 9/11 Flash Demand event

2000

- Patent Office issues CDN patent '703 to MIT

1999

- Victoria's Secret webcast advertised during the Super Bowl. 1.5 million people attempted to watch the show, and many had trouble viewing it. Victoria's Secret then turned to Akamai to improve video delivery. [Rob Frieden] [Borland 1999]

- Akamai goes public

1998

- Akamai was formed in 1998 out of an Entrepreneurship Competition at MIT. Company History, Akamai.

- September: Sandpiper introduces "Footprint" CDN service [614 F.Supp.2d 90]

- July 14: Akamai files provisional application for Patent '703. [614 F.Supp.2d 90]

- February 10: Sandpiper Networks, Inc. files patent application for content delivery system. Patent No. 6,185,598 (issued February 6, 2001) ("Farber Patent") [614 F.Supp.2d 90]

Patents

- Christopher Newton, Laurence Lipstone, William Crowder, Jeffrey G. Koller, David Fullagar, Maksim Yevmenkin (Level 3), Content Delivery Network Patent WO2013090699 A1 June 20, 2013 ("A content delivery network (CDN) includes a control core; and a plurality of caches, each of said caches constructed and adapted to: upon joining the CDN, obtain global configuration data from the control core; and obtain data from other caches. Each of the caches is further constructed and adapted to, having joined the CDN, upon receipt of a request for a particular resource: obtain updated global configuration data, if needed; obtain a customer configuration script (CCS) associated with the particular resource; and serve the particular resource in accordance with the CCS.")

- U.S. Patent No. 7,949,779 titled "Controlling Subscriber Information Rates In A Content Delivery Network," filed 10/31/2007, issued 05/24/2011

- U.S. Patent No. 7,945,693 titled "Controlling Subscriber Information Rates In A Content Delivery Network," filed 10/31/2007, issued 05/17/2011

- U.S. Patent No. 8,015,298 titled "Load-Balancing Cluster," filed 02/23/2009, issued 09/06/2011

- U.S. Patent No. 7,860,964 titled "Policy-Based Content Delivery Network Selection," filed 10/26/2007, issued 12/28/2010

- U.S. Published Patent Application No. 2010-0332664 titled "Load-Balancing Cluster," filed 09/13/2010.

- U.S. Published Patent Application No. 2010-0332595 titled "Handling Long-Tail Content In A Content Delivery Network (CDN)," filed 09/13/2010

- U.S. Published Patent Application No. 2009-0254661 titled "Handling Long-Tail Content In A Content Delivery Network (CDN)," filed 03/21/2009

- U.S. Patent No. 7,054,935 titled "Internet Content Delivery Network," filed 03/13/2002, issued 05/30/2006

- U.S. Patent No. 6,654,807 titled "Internet Content Delivery Network," filed 12/06/2001, issued 11/25/2003

- David Farber, Sandpiper Networks, Inc., U.S. Patent No. 6,185,598 titled "Optimized Network Resource Location," filed 02/10/1998, issued 02/06/2001

- F. Thomson Leighton, Daniel M. Lewin, Global Hosting System, Patent US6108703 A (issued Aug. 22, 2000) (MIT / Akamai exclusive licensee) ("The present invention is a network architecture or framework that supports hosting and content distribution on a truly global scale. The inventive framework allows a Content Provider to replicate and serve its most popular content at an unlimited number of points throughout the world. The inventive framework comprises a set of servers operating in a distributed manner. The actual content to be served is preferably supported on a set of hosting servers (sometimes referred to as ghost servers). This content comprises HTML page objects that, conventionally, are served from a Content Provider site. In accordance with the invention, however, a base HTML document portion of a Web page is served from the Content Provider's site while one or more embedded objects for the page are served from the hosting servers, preferably, those hosting servers near the client machine. By serving the base HTML document from the Content Provider's site, the Content Provider maintains control over the content.")
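The '703 abstract above splits a Web page between two sources: the base HTML document stays on the Content Provider's site, while embedded objects come from hosting servers near the client. One simple way to produce that split is to rewrite the URLs of embedded objects so they resolve to a CDN hostname. The sketch below is an illustration under stated assumptions, not the patented method: the hostname `cdn.example.net`, the convention of encoding the origin host in the path so a cache knows where to fetch on a miss, and the regex matching of `src` attributes are all hypothetical, and a production rewriter would use a real HTML parser.

```python
import re
from urllib.parse import urljoin, urlsplit

CDN_HOST = "cdn.example.net"  # hypothetical CDN hostname


def rewrite_embedded_objects(html: str, page_url: str, cdn_host: str = CDN_HOST) -> str:
    """Point same-origin embedded objects (src attributes) at the CDN; leave links alone."""
    origin = urlsplit(page_url)

    def replace(match: re.Match) -> str:
        attr, quote, url = match.group(1), match.group(2), match.group(3)
        absolute = urlsplit(urljoin(page_url, url))  # resolve relative URLs against the page
        if absolute.netloc != origin.netloc:
            return match.group(0)  # third-party object: not the Content Provider's to offload
        # Hypothetical scheme: carry the origin host in the path for cache-miss fetches.
        new_url = f"{absolute.scheme}://{cdn_host}/{origin.netloc}{absolute.path}"
        if absolute.query:
            new_url += "?" + absolute.query
        return f"{attr}={quote}{new_url}{quote}"

    # Lazy match up to the same quote character that opened the attribute value.
    return re.sub(r'(src)=(["\'])(.*?)\2', replace, html, flags=re.IGNORECASE)


if __name__ == "__main__":
    page = (
        '<img src="/images/logo.png">'
        '<script src="app.js?v=2"></script>'
        '<img src="https://other.example.org/ad.gif">'
        '<a href="/about">About</a>'
    )
    print(rewrite_embedded_objects(page, "https://www.example.com/index.html"))
```

Only `src` attributes change; the page itself and its `href` links still point at the Content Provider, which is how the base document stays under the provider's control as the abstract describes.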

Commercially Available CDN Services

- Akamai: 147,000 servers worldwide in 2,000 locations across 92 countries and 700 cities; partners with 1,200 networks; delivers 15-30% of web traffic. Akamai's customers include NBC, Toyota, FedEx, Apple, MLB, and Travelocity.

- Christian Kaufmann, Akamai Technologies, BGP and Traffic Engineering with Akamai, MENOG 14;

- Akamai, Customers, Customer List. Company History, Akamai

- Company History, Akamai

- Akamai History, AKAMAI

- Ao-Jan Su, David R. Choffnes, Aleksandar Kuzmanovic, and Fabián E. Bustamante, Drafting Behind Akamai: Inferring Network Conditions Based on CDN Redirections ("most CDNs rely on network measurement subsystems to incorporate dynamic network information on replica selection and determine high-speed Internet paths over which to transfer content within the network")

- Amazon

- Amazon CDN was launched in 2008. Goran Candric, The History of Content Delivery Networks, Globadots (Dec. 21, 2012).

- Limelight

- Level3 (acquired CDN service from SAVVIS)

- Content Delivery Networks, Level3

- Rackspace

- Tata

BIAS providers offering CDN service

- AT&T

- Content Distribution. AT&T (reselling Akamai)

- Dan Rayburn, Comcast Launches Commercial CDN Service Allowing Content Owners to Deliver Content Via the Last Mile, Streaming Media Blog (May 19, 2014) ("AT&T has already given up on their own internal CDN and simply resells Akamai.");

- Dan Rayburn, Inside the Akamai and AT&T Deal and Why Akamai May Have Paid Too Much, Streaming Media Blog, (Dec. 10, 2012);

- AT&T CDN service launched in 2011.

- Goran Candric, The History of Content Delivery Networks, Globadots (Dec. 21, 2012)

- Jon Brodkin, How Comcast Became a Powerful - and Controversial - Part of the Internet Backbone, Ars Technica (Jul. 17, 2014)

- CenturyLink: Content Delivery Network Service, CenturyLink

- Comcast

- The Evolution of the Comcast Content Delivery Network, Comcast Voices Feb. 26, 2014

- Dan Rayburn, Comcast Launches Commercial CDN Service Allowing Content Owners to Deliver Content Via the Last Mile, Streaming Media Blog (May 19, 2014) ("I expect some content owners to be able to pay 20%-40% less than what they pay now").

- Akamai: Customers: Case Studies

- Jon Brodkin, It's Not a "Fast Lane" But Comcast Built a CDN to Charge for Video Delivery, Ars Technica (May 19, 2014);

- NTT Communications, Content Delivery Network (CDN) Service

- Sprint

- Verizon | Edgecast

- Dan Rayburn, Comcast Launches Commercial CDN Service Allowing Content Owners to Deliver Content Via the Last Mile, Streaming Media Blog (May 19, 2014) ("In 2010, Verizon did a deal with HBO to deliver their content inside Verizon’s last mile for FiOS subscribers. This was due to the fact that Verizon had built out their own CDN, using the technology from Velocix, which was acquired by Alcatel Lucent. Verizon didn’t do many deals with content owners and ended up changing their CDN plans a few years back, but I do expect Verizon to once again enter the market and offer a similar service to Comcast’s, due to Verizon’s recent purchase of EdgeCast.")

- Content Delivery Network, Verizon.

- Ryan Lawler, Verizon is Acquiring Content Delivery Network Edgecast for more than $350 Million, TechCrunch (Dec. 7, 2013);

- Jon Brodkin, How Comcast Became a Powerful - and Controversial - Part of the Internet Backbone, Ars Technica (Jul. 17, 2014);

- Verizon Plans to Acquire Edgecast Networks, Verizon Press Release (Dec. 9, 2013);

- Key Acquisitions Position Verizon Digital Media Services as One-Stop Shop, Verizon Press Release Jan. 6, 2014

Proprietary CDNs

- Apple

- Dan Rayburn, Apple’s CDN Now Live: Has Paid Deals with ISPs, Massive Capacity in Place, Streaming Media Blog (July 31, 2014);

- Jon Brodkin, Apple Reportedly Will Pay ISPs for Direct Network Connections, Ars Technica (May 20, 2014) ('the company is negotiating paid interconnection deals with "some of the largest ISPs in the US" in order to deliver Apple content to consumers . . . ' According to Rayburn, "Microsoft, Google, Facebook, Pandora, Ebay, and other content owners that have already built out their own CDNs" are also paying ISPs for interconnection.').

- Shara Tibken, Apple Said to build its own Content Delivery Network, CNET (Feb. 3, 2014)

- eBay

- Jon Brodkin, Apple Reportedly Will Pay ISPs for Direct Network Connections, Ars Technica (May 20, 2014) ('the company is negotiating paid interconnection deals with "some of the largest ISPs in the US" in order to deliver Apple content to consumers . . . ' According to Rayburn, "Microsoft, Google, Facebook, Pandora, Ebay, and other content owners that have already built out their own CDNs" are also paying ISPs for interconnection.').

- Facebook

- Stacey Higginbotham, Like Netflix, Facebook is Planning its Own CDN, Gigaom (Jun. 21, 2012)

- Jon Brodkin, Apple Reportedly Will Pay ISPs for Direct Network Connections, Ars Technica (May 20, 2014) ('the company is negotiating paid interconnection deals with "some of the largest ISPs in the US" in order to deliver Apple content to consumers . . . ' According to Rayburn, "Microsoft, Google, Facebook, Pandora, Ebay, and other content owners that have already built out their own CDNs" are also paying ISPs for interconnection.').

- Google Youtube

- An Assessment of IP-interconnection in the context of Network Neutrality, Draft Report for public consultation, Body of European Regulators for Electronic Communications, p. 23, May 29, 2012 (60% of Google’s traffic is transmitted directly to tier 2 or 3 networks on a peering basis, bypassing backbones and transit)

- Jon Brodkin, Apple Reportedly Will Pay ISPs for Direct Network Connections, Ars Technica (May 20, 2014) ('the company is negotiating paid interconnection deals with "some of the largest ISPs in the US" in order to deliver Apple content to consumers . . . ' According to Rayburn, "Microsoft, Google, Facebook, Pandora, Ebay, and other content owners that have already built out their own CDNs" are also paying ISPs for interconnection.').

- Microsoft

- Netflix OpenConnect

- Announcing the Netflix Open Connect Network, Netflix (June 4, 2012).

- Pandora

- Jon Brodkin, Apple Reportedly Will Pay ISPs for Direct Network Connections, Ars Technica (May 20, 2014) ('the company is negotiating paid interconnection deals with "some of the largest ISPs in the US" in order to deliver Apple content to consumers . . . ' According to Rayburn, "Microsoft, Google, Facebook, Pandora, Ebay, and other content owners that have already built out their own CDNs" are also paying ISPs for interconnection.').

Government Activity

- An Assessment of IP-interconnection in the context of Network Neutrality, Draft Report for public consultation, Body of European Regulators for Electronic Communications, p. 17 & 35, May 29, 2012 (“In Q4 2011 CDN prices declined by 20 % (vs. Q4 2010). The corresponding price decline figure for 2010 and 2009 were 25 % resp. 45 %.”).

Caselaw

- Limelight Networks v. Akamai Technologies, 572 U.S. ___ (2014) ("Limelight could not have directly infringed the patent at issue because performance of the tagging step could not be attributed to it.")

- Reviewing: Fed. Cir. en banc, 692 F.3d 1301, 1319 (2012) (per curiam) (reversed the panel; Limelight could be liable for induced infringement) (the "evidence could support a judgment in [respondents’] favor on a theory of induced infringement" under §271(b))

- 629 F.3d 1311, 1321 (Fed. Cir. 2010) (reversal affirmed) ("defendant that does not itself undertake all of a patent’s steps can be liable for direct infringement only “when there is an agency relationship between the parties who perform the method steps or when one party is contractually obligated to the other to perform the steps.”")

- Reconsideration in light of Muniauction (reversed; Limelight cannot be liable in light of Muniauction)

- 614 F. Supp. 2d 90 (D. Mass. 2009)

- Jury Trial ("On February 28, 2008, a jury found that Limelight infringed claims 19-21 and claim 34 of the '703 Patent and that none of the infringed claims were invalid due to anticipation, obviousness, indefiniteness, lack of enablement or written description. The jury awarded Akamai damages of $41.5M based on lost profits and reasonable royalty from April 2005 through December 31, 2007, plus prejudgment interest, along with price erosion damages in the amount of $4M.")

- 2006 lawsuit filed

- Akamai Techs. Inc. v. Cable & Wireless Internet Servs., Inc., 344 F.3d 1186, 1190-91 (Fed.Cir.2003) (patent litigation)

- Akamai v Speedera Networks, Inc. (filed February 2002) (settled) [614 F.Supp.2d 90]

Papers & Presentations

- Jing’an Xue, David Choffnes, and Jilong Wang, CDNs Meet CN: An Empirical Study of CDN Deployments in China, IEEE (2017)

- An assessment of IP interconnection in the context of Net Neutrality, BEREC Report, Start p. 47 (Dec. 6, 2012) (Sec. 4.4.4 Increasing Role of CDNs).

- Tim Berners-Lee, The MIT/Brown Vannevar Bush Symposium (1995) (video archive) (raising the problem of flash demand and the ability of systems to handle the response)

- Buyya, R.; Pathan, A.-M.K.; Broberg, J.; Tari, Z., "A Case for Peering of Content Delivery Networks," Distributed Systems Online, IEEE , vol.7, no.10, pp.3,3, Oct. 2006 doi: 10.1109/MDSO.2006.57

- Tom Coffeen, Limelight, IPv6 CDN, NANOG 46

- Cisco Visual Networking Index: Forecast and Methodology, 2012–2017

- Blake Crosby, CBC, 100 Terabytes a Day: How CBC Delivers Content to Canadians, NANOG 55 (June 3, 2012) (good discussion of use of CDN)

- Gilbert Held, A Practical Guide to Content Delivery Networks, 2nd Edition (2010)

- J. Jung, B. Krishnamurthy, and M. Rabinovich, “Flash Crowds and Denial of Service Attacks: Characterization and Implications for CDNs and Web Sites,” In Proceedings of the International World Wide Web Conference, pp. 252-262, May 2002.

- Christian Kaufmann, Akamai Technologies, BGP and Traffic Engineering with Akamai, MENOG 14 (2012) [Kaufman]

- Sramana Mitra, Speeding up the Internet: Algorithms Guru and Akamai Founder Tom Leighton, One Million by One Million (Oct. 17, 2007)

- Stocker, Volker; Smaragdakis, Georgios; Lehr, William; Bauer, Steven (2016) : Content may be King, but (Peering) Location matters: A Progress Report on the Evolution of Content Delivery in the Internet, 27th European Regional Conference of the International Telecommunications Society (ITS), Cambridge, United Kingdom, 7th - 9th September 2016, International Telecommunications Society (ITS), Cambridge, UK [Stocker]

- NANOG 55 CDN Panel 2012

- Al-Mukaddim Khan Pathan and Rajkumar Buyya, A Taxonomy and Survey of Content Delivery Networks (2006) ("A CDN is a combination of content-delivery, request-routing, distribution and accounting infrastructure") [Pathan]

- Ivan Philips, Costs of Content Delivery: WIE 2013 San Diego [Philips]

- Gregory Rose, The Economics of Internet Interconnection: Insights from the Comcast-Level3 Dispute, p. 5 (March 28, 2011) ("Among broadband access networks in the U.S., only Comcast deviates from the settlement-free model of peering. Comcast has always seen peering as a potential profit center. In recent F.C.C. filings Comcast has reported that both Akamai and Limelight, as well as other CDNs, have been paying settlement fees for access to Comcast subscribers, despite the near-universal practice of settlement-free peering. However, it is difficult to verify Comcast’s claim. ").

- Boris Tulman, Akamai Technologies

Statistics

- Percentage of data delivered from CDNs

- Craig Labovitz, The New Internet, Global Peering Forum, Slide 13 (April 12, 2016) (CDN as a percentage of peak ingress traffic. “In 2009, CDN helped to offload less than ¼ traffic. Most content delivered via peering / transit. By 2015, the majority of traffic is CDN delivered from regional facility or provider based appliance.” 2009: ~20%; 2011: ~35%; 2013: ~51%; 2015: ~61%)

- CDN market share in the Alexa top 1K Websites, Datanyze.

- Craig Labovitz, First Data on Changing Netflix and Content Delivery Market Share, deepfield (June 9th, 2012).

News

- Akamai Slashing Media Pricing In Effort To Fill Network, Won’t Fix Their Underlying Problem, Streaming Media (Sept. 14, 2016) (Because Akamai’s top six media customers have moved a large percentage of their traffic to their own in-house CDNs over the past 18 months, Akamai has been scrambling to fill the excess capacity left on its network.)

- Shara Tibken, Apple Said to build its own Content Delivery Network, CNET (Feb. 3, 2014).

- Cecilia Kang, Netflix CEO Q&A: Picking a Fight with the Internet Service Providers, Washington Post, July 11, 2014

- Stacey Higginbotham, Like Netflix, Facebook is Planning its Own CDN, Gigaom (Jun. 21, 2012)

- Stacey Higginbotham, Peering Pressure: The Secret Battle to Control the Future of the Internet, GigaOm (June 19, 2013)

- Goran Candric, The History of Content Delivery Networks, Globadots (Dec. 21, 2012).

- Dan Rayburn, CDNs Account for 40% of the Overall Traffic Volume Flowing Into ISP Networks, Streaming Media Blog (Oct. 8, 2012).

- Craig Labovitz, First Data on Changing Netflix and Content Delivery Market Share, deepfield (June 9th, 2012)

- Jon Brodkin, Apple Reportedly Will Pay ISPs for Direct Network Connections, Ars Technica (May 20, 2014) ('the company is negotiating paid interconnection deals with "some of the largest ISPs in the US" in order to deliver Apple content to consumers . . . ' According to Rayburn, "Microsoft, Google, Facebook, Pandora, Ebay, and other content owners that have already built out their own CDNs" are also paying ISPs for interconnection.').

- Dan Rayburn, Comcast Launches Commercial CDN Service Allowing Content Owners to Deliver Content Via the Last Mile, Streaming Media Blog (May 19, 2014) ("AT&T has already given up on their own internal CDN and simply resells Akamai.");

- Joan Engebretson, Netflix / Comcast Deal Relies on Third Party Data Centers for Interconnect, Telecompetitor (Feb. 24, 2014) ("Additionally some providers, including Verizon, are accustomed to getting paid for providing content storage capability to website operators.")

- Dan Rayburn, Inside the Akamai and AT&T Deal and Why Akamai May Have Paid Too Much, Streaming Media Blog, (Dec. 10, 2012);

- Goran Candric, The History of Content Delivery Networks, Globadots (Dec. 21, 2012);

- Tim Siglin, What is a Content Delivery Network (CDN)?, Streaming Media.com (March 20, 2011).[Siglin]

- Latest Internet Traffic Stats: Google and CDNs Outmuscle Tier 1 Telcos, Telco2 (Oct. 20, 2009).

- Atlas Internet Observatory 2009 Annual Report, Slide 17, NANOG 2009

- John Borland, Net Video Not Yet Ready for Prime Time, CNET (Feb. 5, 1999) (1.5 m hits during show, "many users were unable to reach the site during the live broadcast because of network bottlenecks and other Internet roadblocks.")

- S. Adler, “The SlashDot Effect: An Analysis of Three Internet Publications,” Linux Gazette Issue, Vol. 38, 1999